What's the first thing you do when deciding what AI model to use to accomplish a task? If you're like me, you probably look at evaluations. Everyone has their favorites: MMLU for world-knowledge, IFEval for following formatting instructions, RULER for long-context, AIME-2025 for math reasoning abilities that are practically useless in 99% of situations... you get the picture.

Of course, there are a lot of concerns about benchmarks. As compute-intensive RL has become the dominant post-training paradigm, we can no longer be sure that a model hasn't been heavily trained on a specific task very similar to any given benchmark. (This differs from the era of pre-training, where benchmarks measured "emergent" abilities that appeared sort-of by accident as a consequence of reading lots of text, and could therefore be expected to generalize better.) And that's not even getting into the weeds on whether benchmarks are measuring something different than what we actually care about (pic very related).

But that's not what I'm here to talk about today. Because as confusing and weird and maybe-problematic as most LLM benchmarks are, they have nothing on what I've seen working on computer-use models over the past few weeks.

SOTA Models. SOTA Models Everywhere.

Something you'll quickly notice if you spend a lot of time absorbing LLM releases from startups, AI labs, and sadly even academics is that everyone seems to have the best model. Very rarely will you see a blog post or paper introducing a benchmark or model where the author's model (if they have one) isn't on top. And if there is a better model out there, that model will conveniently be omitted from the bar chart. (As they say, never ask a woman her age, a man his salary, or Mistral how their model compares to a similarly-sized Qwen.)

This trend makes it very hard to trust benchmarks, and it's just as bad in the "computer/browser use" vertical. It's an incredibly competitive space: just off the top of my head, I can name BrowserBase, Browser Use, Skyvern, Induction Labs, c/ua, Magnitude, H Company, General Agents (not to be confused with General Agency), and that's on top of the AI lab projects like OpenAI Operator, Claude Computer Use, Google's Project Mariner, and Amazon's Nova Act. Oh, and there are also tons of academic projects like Jedi, GroundUI, SeeClick, and so on. Whew.

Because it's so competitive, there's a lot of pressure to stand out, which usually means demonstrating that your harness-plus-model combo is state of the art on WebVoyager or OSWorld. These incentives are weird, and they produce outcomes that are... also weird. (Surprise!) I think in general, these evaluations provide a highly distorted view of the actual capabilities of these models, and as we'll explore in detail below, you basically should not trust them. If you have the time, you should do your own digging instead.

Case Study: The General Agents "Showdown" Benchmark

My intention is not to single anyone out. I listed a lot of startups above that I think are awesome and doing great work (some of them are my friends!). In particular, General Agents, who I'm going to talk about here, seems to have trained a very fast, capable computer-use agent. But benchmarks are hard. And when borrowing their benchmark for my own evaluations, I got very surprising results for the Qwen model I've been playing around with, which leads me to believe that these benchmarks are extremely sensitive to some combination of prompting, tools, and output format.

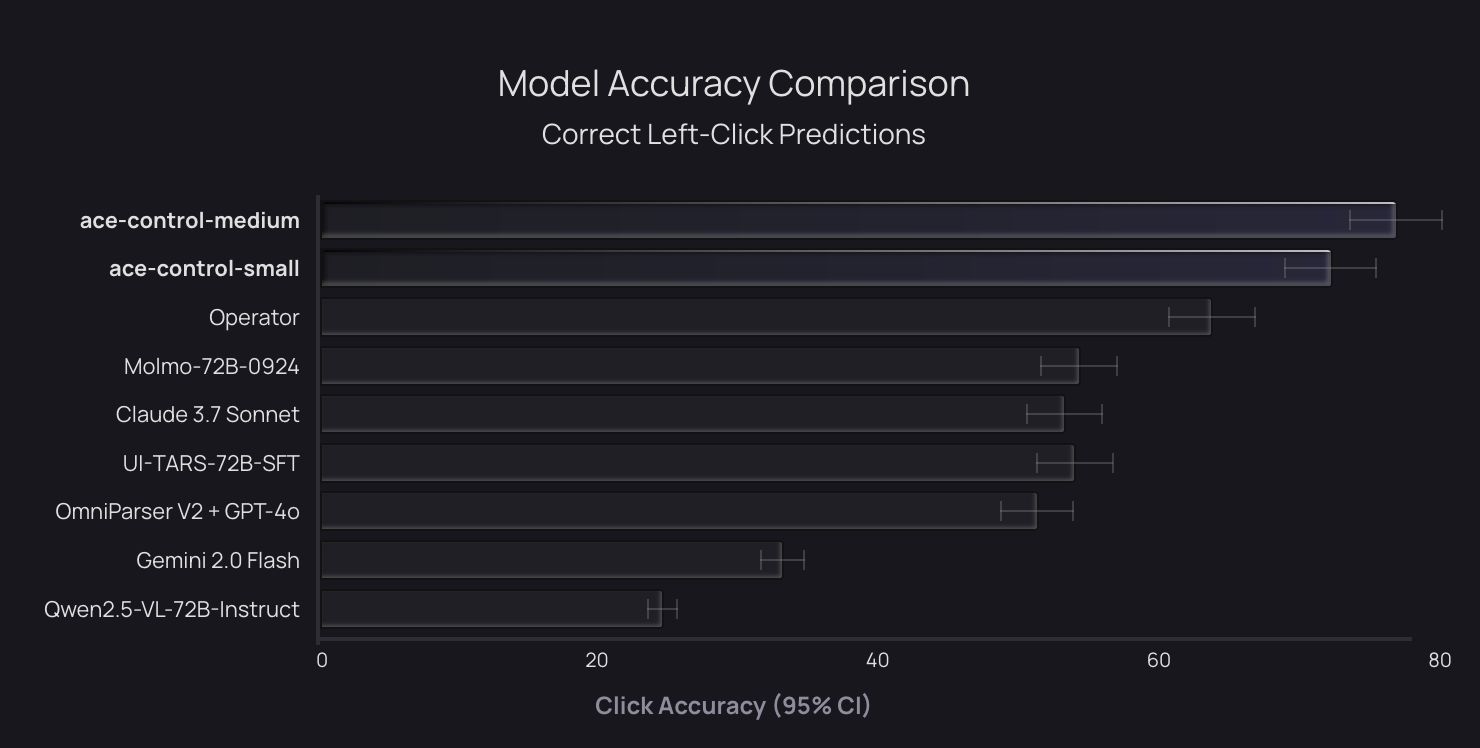

The benchmark in question is called "Showdown Clicks," and it's a collection of around 500 examples, each of which has a UI screenshot, an instruction, and a bounding box. The instruction tells the model what action to perform, the model outputs a "click", and if the model's click is inside the bounding box, it's scored correct. In the chart released by General Agents, the largest Qwen-2.5-VL model scores a measly 20%, while their small and medium models top the chart at a show-stopping 70% and 80%.
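To make the scoring rule concrete, here's a minimal sketch of "click inside the bounding box counts as correct." The function names and the `(x_min, y_min, x_max, y_max)` pixel layout are my own assumptions for illustration, not the actual Showdown Clicks data format or scoring code.

```python
from typing import Optional, Sequence, Tuple

# Assumed layout: (x_min, y_min, x_max, y_max) in pixels. Hypothetical, not the dataset's schema.
Box = Tuple[float, float, float, float]
Click = Optional[Tuple[float, float]]  # None means the model's output could not be parsed


def click_in_box(x: float, y: float, box: Box) -> bool:
    """Return True if the click (x, y) lands inside the box, edges included."""
    x_min, y_min, x_max, y_max = box
    return x_min <= x <= x_max and y_min <= y <= y_max


def click_accuracy(clicks: Sequence[Click], boxes: Sequence[Box]) -> float:
    """Fraction of examples whose predicted click falls inside the target box.

    Unparseable outputs (None) are counted as misses.
    """
    if len(clicks) != len(boxes):
        raise ValueError("clicks and boxes must have the same length")
    if not boxes:
        return 0.0
    hits = sum(
        1
        for click, box in zip(clicks, boxes)
        if click is not None and click_in_box(click[0], click[1], box)
    )
    return hits / len(boxes)
```

Note that counting unparseable outputs as misses is itself a choice: a model that clicks in the right place but in the "wrong" output format scores zero under this rule.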

My first thought when I saw this was "Damn, this must be a hard benchmark." Models trained to do GUI grounding, like Moondream, the newer Qwen models and Claude, typically achieve 75%-90%+ on GUI-clicking benchmarks like ScreenSpot and WebClick. Even the teeny-tiny 3B version of Qwen-2.5-VL, which I've been playing with for GUI grounding finetuning, starts off getting well over 70%.

As GUI elements get more confusing (calendars!) or instructions get more abstract ("Select the product most evocative of the touch of a scorned lover and add it to my cart"), performance predictably falls. But a benchmark where the 72B Qwen scores a mere 20% must be challenging indeed. So, I thought, I should definitely add it to my list of evals. "If I hill-climb this bad boy, my model will be SOTA in no time." Then, I actually ran the eval, and my teeny-tiny 3B Qwen got 50% correct.

What Happened?

As it turns out, the main difference between my setup and theirs is... drum roll please... the prompt. To General Agents' credit, they didn't do anything insane. You can see for yourself here. It looks like they adapted their prompt from an official Qwen cookbook. It gives the model tons of tools for different actions (scrolling, waiting, dragging, typing), of which "clicking" is just one. My setup uses a much simpler prompt, which only lets the model click by responding with XML:

Determine where to click in the UI to complete the instruction/task.

Report the click location in XML like '<points x1="x" y1="y">click</points>.'

Image size [{w}, {h}], (0, 0) is the top-left and ({w}, {h}) is the bottom-right.

[IMAGE]

{instruction}
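For the curious, here's a rough sketch of how the text part of the prompt above could be filled in and how the model's XML reply could be turned back into a click. The names `build_prompt` and `parse_click` and the regex are assumptions of mine for illustration, not my exact eval code; the screenshot and instruction would be attached to the chat message separately.

```python
import re
from typing import Optional, Tuple

# Text portion of the prompt; {w} and {h} are filled with the screenshot size in pixels.
PROMPT_TEMPLATE = (
    "Determine where to click in the UI to complete the instruction/task.\n\n"
    "Report the click location in XML like '<points x1=\"x\" y1=\"y\">click</points>.'\n\n"
    "Image size [{w}, {h}], (0, 0) is the top-left and ({w}, {h}) is the bottom-right."
)

# Matches the opening tag, e.g. <points x1="412" y1="96.5">, capturing both numbers.
POINT_RE = re.compile(
    r'<points\s+x1="(-?\d+(?:\.\d+)?)"\s+y1="(-?\d+(?:\.\d+)?)"[^>]*>'
)


def build_prompt(w: int, h: int) -> str:
    """Fill the image size into the prompt template."""
    return PROMPT_TEMPLATE.format(w=w, h=h)


def parse_click(model_output: str) -> Optional[Tuple[float, float]]:
    """Extract the first (x, y) click from the model's reply, or None if absent."""
    match = POINT_RE.search(model_output)
    if match is None:
        return None
    return float(match.group(1)), float(match.group(2))
```

A reply that doesn't contain the expected tag comes back as `None`, which makes it easy to separate "clicked in the wrong place" from "answered in the wrong format" when debugging a low score.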

"You're cheating!", one might protest. "Of course your model does better, you only let it click!" But in my defense, it is a clicking benchmark, and my prompt also isn't insane. I adapted it from the Qwen spatial understanding cookbook, which shows examples of the model using XML. I tweaked it slightly to ask for the exact format the model actually seemed to prefer, and provided the image size just in case that helps. And yes, this did make a big difference for accuracy: XML prompting worked a lot better than trying to force the model to output simple "(x, y)" coordinates.

And it's not just this benchmark. I see similar results on OSWorld-G, where the published result for Qwen-2.5-VL 3B understates the potential of the model (I get 43%, vs. the published 27%).

Conclusion: Sorry For Being a Hater

So what's the takeaway here? I think it is as follows. Computer-use and GUI grounding models (like many LLMs!) are extremely sensitive to their harness and how they were trained. As a result, some evaluation setups may vastly understate the capabilities of a model. In this case, you would think Qwen-2.5-VL 3B is godawful at clicking, but it's actually pretty good! For practitioners evaluating models, this should be a call to try more prompting setups before concluding that a model is bad at something. As another example, many folks still aren't aware that Gemini is visually-grounded, because it was trained to output bounding boxes and points with [y, x] coordinates instead of [x, y]. (Why? Just why?)
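To show how small the Gemini gotcha is once you know about it, here's a sketch of converting a [y, x] point into ordinary (x, y) pixel coordinates. The function name is hypothetical, and the 0-1000 normalization is an assumption on my part (it's my understanding of how Gemini reports coordinates, but check the current docs before relying on it).

```python
from typing import Tuple


def yx_normalized_to_xy_pixels(
    point_yx: Tuple[float, float],
    width: int,
    height: int,
    scale: float = 1000.0,  # ASSUMPTION: coordinates are normalized to a 0-1000 range
) -> Tuple[float, float]:
    """Convert a [y, x] point on a normalized grid to (x, y) in pixels."""
    y_norm, x_norm = point_yx
    # Swap the order and rescale each axis by its own image dimension.
    return (x_norm / scale * width, y_norm / scale * height)
```

Skip the swap and your clicks land mirrored across the image diagonal, which on a benchmark looks exactly like a model that can't ground at all.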

I unfortunately only have criticism today; I do not have an answer for how to fairly compare models that all work better with different prompts, where said prompts are often not documented, poorly documented, or even incorrectly documented. It would be great if model providers did a better job of this, but a lot of the time, even they don't know the best way to use their models; it's up to random Twitter anons to figure out how to prompt them.

And so it shall be forever and ever. Amen.

As always, if you loved this post or if you hated it, come yell at me on Twitter: @andersonbcdefg.