Along with the much-awaited release of Claude Opus 4.5 this month, Anthropic released new tool-calling capabilities ("advanced tool use"), including a "Tool Search Tool" and "Programmatic Tool Use." The Tool Search Tool allows models to search for and discover tools at runtime, rather than dumping them all into the context whether they're needed or not. Programmatic Tool Use allows models to write code to chain and compose tools without having to read and re-write intermediate outputs. These are not new patterns, but this is the first time they've been built into a major AI provider's SDK.

As I said on Twitter, these features are awesome, and the only sad thing about them is that they're only offered by Anthropic. Boo provider lock-in! So, in this post, I'll walk through the process of building an open alternative—I'm calling it Open Tool Composition, or OTC for short.

The Setup

A utility for tool composition has to do a few things:

- (1) Collect "complex" tool calls made by a model and translate them to regular tool calls (e.g. web search, bash, text editor).

- (2) Execute all the simple tool calls.

- (3) Package up the result and send it back.

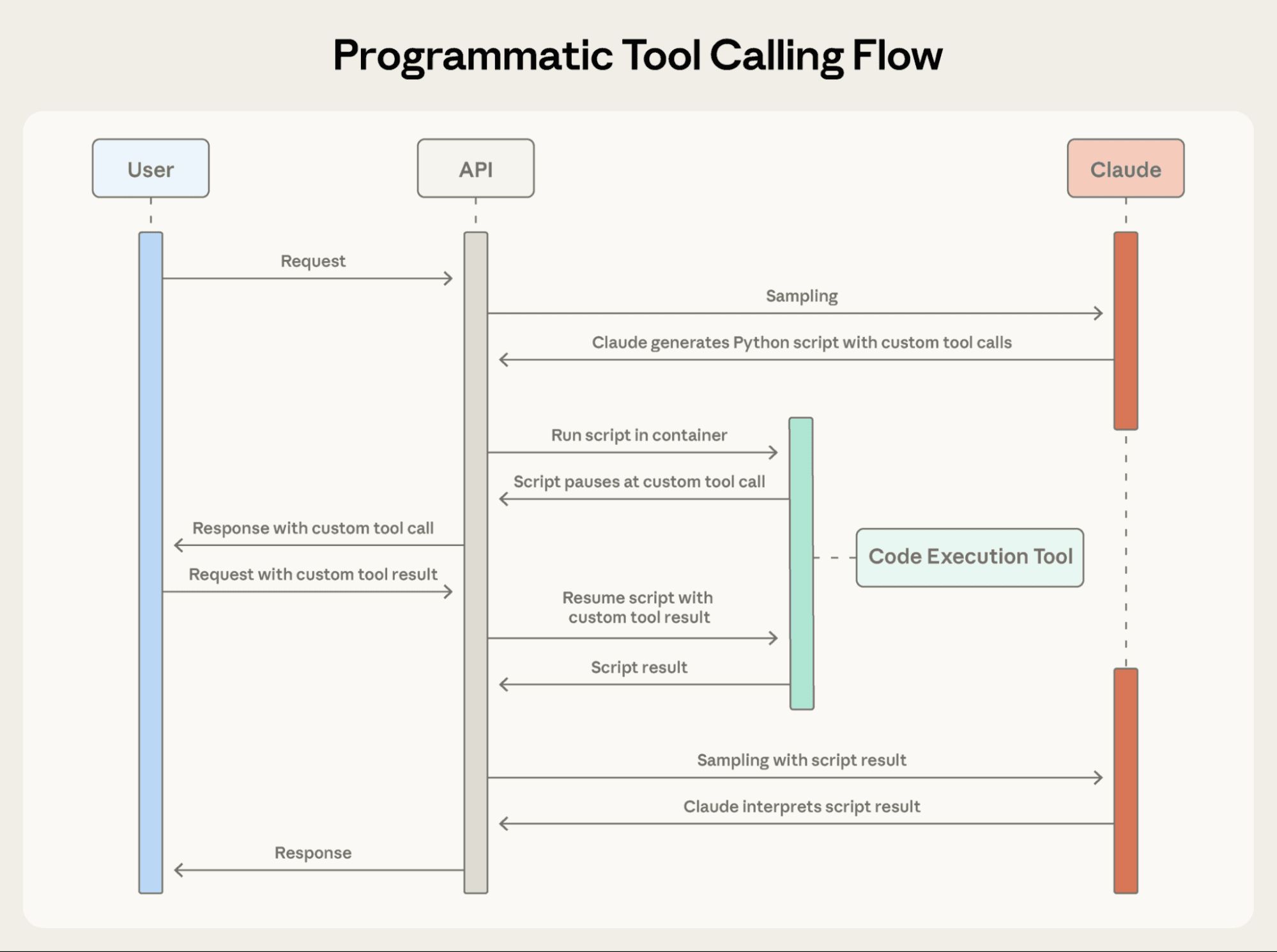

You can think of the "tool composition" layer as a thin proxy between the code that invokes the model API and the AI provider. Anthropic has a nice picture of it here (the tool composition layer is labeled "API", since Anthropic's API handles it for you).

But actually implementing this as a proxy is a drag. No one wants to run another server and proxy all their LLM calls through it! Yuck! Instead, I opted to run the translation layer right next to the "user" code invoking the model. To do this, I create a "meta-tool," e.g. compose or tool_search, and expose it to the model. The model calls the meta-tool, the meta-tool calls the regular tools, and the final response is given back to the model. Easy!

Of course, each provider's models do tool calling in a slightly different way, which means both the meta-tools and the regular tools will differ per-provider. You either need separate OTC implementations for OpenAI, Anthropic, and Google, or you need a provider-agnostic SDK. Luckily, I made a cool library called lm-deluge, so I have the latter.

A truly great OTC framework would work with each provider's SDK and not force you to adopt my library. But for now, we'll have to settle for merely good; a first stab to nail down the abstractions. In the rest of this post, I'll demonstrate building the OTC tool as a "prefabricated" lm-deluge tool. You could do the same with your preferred SDK.

Simple Case: The "Batch" Tool

If you've built an agent before, and you haven't built a "batch" tool, you're "ngmi", as the kids say. A batch tool is the simplest case of tool composition, designed for the single purpose of saving model-tool roundtrips. I first saw this as a recommendation from Anthropic to coax models reluctant to make parallel tool calls into making them anyway. I implemented a version for computer use (dubbed the "multi-computer tool"), which offered a substantial latency reduction by letting the model queue up 3-4 actions at once (e.g. click to focus input, type, hit tab to navigate to next input). This shaves off substantial time without sacrificing accuracy. Later, I did this for agentic search, allowing the model to "stagger" search and fetch calls, reading documents from a previous search and queuing up new searches at the same time.
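To make the round-trip saving concrete, here's a minimal standalone sketch of a batched request. The `search` and `fetch` functions are hypothetical stand-ins for real tools, and `run_batch` is my own illustrative helper, not part of lm-deluge; the payload shape (a list of objects with `tool` and `arguments` keys) mirrors the lm-deluge snippet in this post.

```python
import asyncio
import json
from typing import Any, Awaitable, Callable


# Hypothetical stand-ins for real tools; any async callables would do.
async def search(query: str) -> str:
    return f"results for {query!r}"


async def fetch(url: str) -> str:
    return f"contents of {url}"


TOOLS: dict[str, Callable[..., Awaitable[str]]] = {"search": search, "fetch": fetch}


async def run_batch(calls: list[dict[str, Any]]) -> str:
    """Run each requested call in order and return all results as one JSON string."""
    results: list[dict[str, Any]] = []
    for call in calls:
        name = call.get("tool", "")
        tool = TOOLS.get(name)
        if tool is None:
            # Report the problem in-band so the rest of the batch still runs.
            results.append(
                {"tool": name, "status": "error", "error": f"Unknown tool '{name}'"}
            )
            continue
        output = await tool(**(call.get("arguments") or {}))
        results.append({"tool": name, "status": "ok", "result": output})
    return json.dumps(results)


# What a model might send in one turn instead of two separate round trips.
calls = [
    {"tool": "search", "arguments": {"query": "tool composition"}},
    {"tool": "fetch", "arguments": {"url": "https://example.com/doc"}},
]
batch_output = json.loads(asyncio.run(run_batch(calls)))
print(batch_output)
```

Two tool calls, one model turn. The results come back in the same order the calls were requested, so the model can match them up by position.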

The batch tool only makes sense in the context of other tools; it simply allows the model to provide a list or batch of tool calls, which are parsed into individual calls and executed, before the combined results are returned. In lm-deluge, it looks roughly like this:

    class BatchTool:
        """Expose a single tool that runs multiple other tools in one request."""

        def __init__(self, tools: list[Tool]):
            self.tools = tools
            # Index tools by name so each requested call can be resolved directly
            self._tool_index = {tool.name: tool for tool in tools}

The batch tool needs to know about the whole "universe" of tools that the model might try to call, so it can execute them when provided. Then, to run it, we just loop over all the model's requested calls, do them one by one, and give back the results:

    async def _run(self, calls: list[dict[str, Any]]) -> str:
        """Execute each requested tool and return ordered results as JSON."""
        results: list[dict[str, Any]] = []
        for call in calls:
            tool_name = call.get("tool", "")
            arguments = call.get("arguments") or {}
            tool = self._tool_index.get(tool_name)
            if tool is None:
                # Record the failure and keep going with the rest of the batch
                results.append(
                    {
                        "tool": tool_name,
                        "status": "error",
                        "error": f"Unknown tool '{tool_name}'",
                    }
                )
                continue
            output = await tool.acall(**arguments)
            results.append({
                "tool": tool.name, "status": "ok", "result": output
            })
        return json.dumps(results)

Easy enough, right? The meta-tool just handles the looping, calling, and aggregating; the individual Tool objects already know how to run themselves. Finally, this is packaged up as its own Tool object, which lm-deluge knows how to translate for any AI provider!

    return Tool(
        name="batch_tool",
        description=self._build_description(),
        run=self._run,
        parameters=parameters,
        required=["calls"],
        definitions=definitions or None,
    )

Implementing a Tool-Search Tool

"Tool search" is an abstraction that allows models to dynamically discover tools, instead of loading them all into the context. It's a meta-tool similar in spirit to the batch tool: it knows about a universe of tools, and the model can choose to call any of them. The difference is that the batch tool expects the model to know about the tools up-front, whereas the tool-search tool lets the model discover the tools as needed. This means you could scale to hundreds of tools without polluting the context window with the ones that aren't needed. The initial setup is basically the same:

    class ToolSearchTool:
        """Allow a model to discover and invoke tools by searching name/description."""

        def __init__(self, tools: list[Tool]):
            self.tools = tools
            self.max_results_default = 3
            self._registry = self._build_registry(tools)

The _build_registry method just does housekeeping (assign stable IDs to tools, save them to a dictionary for O(1) lookup). Then, we need to implement search capabilities to let the model discover a tool. Ideally you'd want to do something clever here like BM25; I just did the dumbest possible keyword search.

    async def _search(
        self, pattern: str, max_results: int | None = None
    ) -> str:
        """Search tools by regex and return their metadata."""
        try:
            compiled = re.compile(pattern, re.IGNORECASE)
        except re.error as exc:
            return json.dumps({"error": f"Invalid regex: {exc}"})

        limit = max_results or self.max_results_default
        matches: list[dict[str, Any]] = []
        for entry in self._registry.values():
            if compiled.search(entry["name"]) or compiled.search(entry["description"]):
                matches.append(
                    {
                        "id": entry["id"],
                        "name": entry["name"],
                        "description": entry["description"],
                        "parameters": entry["parameters"],
                        "required": entry["required"],
                        "signature": self._tool_signature(entry),
                    }
                )
                if len(matches) >= limit:
                    break
        return json.dumps(matches)

Once the model searches for some tools, it needs to be able to run them! Since we don't want to break caching by dumping new tools into the model provider's actual tools parameter, the work-around is to instead invoke the discovered tool(s) indirectly, via ToolSearchTool._call. This literally just looks up the tool by ID and calls it:

    async def _call(
        self,
        tool_id: str,
        arguments: dict[str, Any] | None = None,
    ) -> str:
        """Invoke a matched tool by id."""
        entry = self._registry.get(tool_id)
        if entry is None:
            return json.dumps({"error": f"Unknown tool id '{tool_id}'"})

        tool = entry["tool"]
        try:
            # arguments defaults to None, so fall back to an empty dict before unpacking
            output = await tool.acall(**(arguments or {}))
            return json.dumps({"tool": tool.name, "tool_id": tool_id, "result": output})
        except Exception as exc:  # pragma: no cover - defensive
            return json.dumps(
                {
                    "tool": tool.name,
                    "tool_id": tool_id,
                    "error": f"{type(exc).__name__}: {exc}",
                }
            )

As before, these get packaged up into lm_deluge.Tool objects, which can be passed to models! Ta-da, tool-search tool complete.
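If you want the "something clever like BM25" version, here's a minimal sketch of what that could look like. To be clear, this is not what lm-deluge ships: `BM25Index` and `tokenize` are names I made up for illustration, the tool IDs and descriptions are invented, and `k1=1.5`, `b=0.75` are just commonly used defaults. It scores each tool's name-plus-description text against the query with Okapi BM25 and returns the best-scoring tool IDs.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumerics, so 'get_weather' -> ['get', 'weather']."""
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25Index:
    """Tiny in-memory BM25 index over tool name + description text (illustrative only)."""

    def __init__(self, docs: dict[str, str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.doc_tokens = {doc_id: tokenize(text) for doc_id, text in docs.items()}
        self.term_freqs = {d: Counter(toks) for d, toks in self.doc_tokens.items()}
        n = len(docs)
        self.avg_len = sum(len(t) for t in self.doc_tokens.values()) / max(n, 1)
        # Document frequency: in how many tool descriptions does each term appear?
        doc_freq = Counter(term for tf in self.term_freqs.values() for term in tf)
        # Smoothed IDF; the "1 +" inside the log keeps every weight non-negative.
        self.idf = {
            t: math.log(1 + (n - df + 0.5) / (df + 0.5)) for t, df in doc_freq.items()
        }

    def search(self, query: str, max_results: int = 3) -> list[str]:
        """Return up to max_results tool ids, best match first."""
        query_terms = tokenize(query)
        scores: dict[str, float] = {}
        for doc_id, tf in self.term_freqs.items():
            length = len(self.doc_tokens[doc_id])
            score = 0.0
            for term in query_terms:
                f = tf.get(term, 0)
                if f == 0:
                    continue
                # Length normalization: long descriptions get less credit per hit.
                norm = f + self.k1 * (1 - self.b + self.b * length / self.avg_len)
                score += self.idf[term] * f * (self.k1 + 1) / norm
            if score > 0:
                scores[doc_id] = score
        return sorted(scores, key=lambda d: scores[d], reverse=True)[:max_results]


# Invented registry contents, keyed by tool id.
docs = {
    "tool_0": "get_weather Get the current weather forecast for a city",
    "tool_1": "send_email Send an email message to a recipient",
    "tool_2": "search_web Search the web for pages matching a query",
}
index = BM25Index(docs)
hits = index.search("weather forecast")
print(hits)
```

The practical upside over regex is that the model can search with a plain phrase, and results come back ranked instead of in registry order.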

OTC: Towards Full Composition

Full tool-composition is a more challenging task. This is what Anthropic calls Programmatic Tool Use, but it has also been called "Code Mode" by Cloudflare, and "CodeAct" by Apple. This is the full monty of dynamic tool use, allowing arbitrary composition and chaining of tools. Unfortunately, that also means you have to define a DSL, parse it, and execute it. Fun!

For our DSL, we choose a very, very limited subset of Python, so we can just use the Python interpreter. I won't bore you with the details; I basically just had Claude figure this all out. The lion does not concern himself with AST parsing. It's probably not watertight, but we at least block obvious things like eval, reading and writing files, etc. The code runs in an environment where only basic Python control flow is allowed, plus all Tools are available in the form of Python functions.
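For the curious, here's roughly what allowlist-based validation with Python's `ast` module can look like. This is a sketch under my own assumptions, not lm-deluge's actual validator: the `ALLOWED_NODES` and `SAFE_CALLS` sets, `ValidationError`, `validate`, and `is_allowed` are all hypothetical, and the rules are stricter than a real DSL would want (attribute access is rejected entirely, so even `json.dumps` would fail here). It assumes Python 3.9+.

```python
import ast

# Hypothetical allowlist: basic statements, expressions, and control flow only.
ALLOWED_NODES = (
    ast.Module, ast.Expr, ast.Assign, ast.AugAssign, ast.Name, ast.Load, ast.Store,
    ast.Constant, ast.Call, ast.keyword, ast.BinOp, ast.UnaryOp, ast.BoolOp,
    ast.Compare, ast.operator, ast.unaryop, ast.boolop, ast.cmpop,
    ast.If, ast.For, ast.While, ast.Break, ast.Continue, ast.Pass,
    ast.List, ast.Tuple, ast.Dict, ast.Subscript, ast.Slice,
    ast.JoinedStr, ast.FormattedValue,
)
# Hypothetical set of harmless builtins the model may call directly.
SAFE_CALLS = {"print", "len", "range", "str", "int", "float", "list", "dict"}


class ValidationError(Exception):
    """Raised when model-written code steps outside the allowed subset."""


def validate(code: str, tool_names: set[str]) -> ast.Module:
    """Parse code and reject anything not on the allowlist; return the AST if clean."""
    tree = ast.parse(code, mode="exec")
    allowed_calls = SAFE_CALLS | tool_names
    for node in ast.walk(tree):
        # Imports, attribute access, lambdas, comprehensions, etc. all fail here.
        if not isinstance(node, ALLOWED_NODES):
            raise ValidationError(f"Disallowed syntax: {type(node).__name__}")
        # Calls must target a bare name that is a known tool or safe builtin.
        if isinstance(node, ast.Call):
            func = node.func
            if not (isinstance(func, ast.Name) and func.id in allowed_calls):
                raise ValidationError("Only tools and safe builtins may be called")
        # Dunder names are a common route to interpreter internals.
        if isinstance(node, ast.Name) and node.id.startswith("__"):
            raise ValidationError(f"Disallowed name: {node.id}")
    return tree


def is_allowed(code: str, tool_names: set[str]) -> bool:
    """Convenience wrapper that reports validity as a boolean."""
    try:
        validate(code, tool_names)
    except (ValidationError, SyntaxError):
        return False
    return True


tools = {"add", "multiply"}
print(is_allowed("total = add(5, 7)\nprint(multiply(total, 3))", tools))  # True
print(is_allowed("import os", tools))                                      # False
print(is_allowed("eval('1 + 1')", tools))                                  # False
print(is_allowed("open('secrets.txt')", tools))                            # False
```

An allowlist is the safer default: anything you forgot to think about is rejected, whereas a blocklist lets it through.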

The "executor" takes a block of this pseudo-Python written by the model, and runs it line by line, stopping when it hits a block that needs to run a tool to be evaluated. This handles all the glue, like looping over stuff, passing the output of Tool A as the input of Tool B, and so on.

    async def execute(self, code: str) -> str:
        """Execute OTC code and return the final output.

        The execution model:
        1. Parse and validate the code
        2. Execute line-by-line, collecting tool calls
        3. When we hit a point where results are needed, execute pending calls
        4. Continue until done
        5. Return captured output or final expression value
        """
        tree = validate_code(code, self.tool_names)

        # Set up execution environment
        pending_calls: list = []
        results: dict = {}
        output_capture = OutputCapture()
        pending_call_ids: set[int] = set()
        call_state = {"next_id": 0}

        # Build globals
        exec_globals = {
            "__builtins__": {**SAFE_BUILTINS, "print": output_capture.print},
            "json": json,  # Allow json for output formatting
            **tool_wrappers,
        }
        exec_locals: dict = {}

        max_iterations = 100  # Prevent infinite loops
        for _ in range(max_iterations):
            # Reset call sequencing and pending tracking for this pass
            call_state["next_id"] = 0
            pending_call_ids.clear()
            try:
                exec(compile(tree, "<otc>", "exec"), exec_globals, exec_locals)
                # If we get here, execution completed
                # Execute any remaining pending calls (though their results won't be used)
                await self._execute_pending_calls(pending_calls, results)
                pending_call_ids.clear()
                break
            except Exception as e:
                raise OTCExecutionError(f"Execution error: {type(e).__name__}: {e}")
        else:
            raise OTCExecutionError("Exceeded maximum iterations")

        # Get output
        output = output_capture.get_output()

        # If no print output, try to get the last expression value
        if not output and exec_locals:
            # Look for a 'result' variable or the last assigned value
            if "result" in exec_locals:
                result = self._resolve_output_value(exec_locals["result"], results)
                if isinstance(result, str):
                    output = result
                else:
                    output = json.dumps(result, default=str, indent=2)

        return output if output else "Composition completed with no output"

This allows models to write code like the following:

    total = add(5, 7)
    product = multiply(total, 3)
    result = subtract(product, 4)
    # json is provided by the OTC executor environment
    print(json.dumps({"answer": result}))

This executor is wrapped in a Tool much like the previous ones, and models can provide code like the above as input to the tool. Normally, the model would have to take the result of 5 + 7, and then pass that as the input into a second tool call, meaning it has to read "12" and write "12". This is not so bad for "12", but would be very bad if the intermediate result was 1000 lines of text, or a complex JSON item! With tool composition, only the final answer goes back into the model, so tokens are not wasted reading and copying intermediate results.
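To see the token saving in action, here's a stripped-down synchronous sketch that runs the example above. It is not the lm-deluge executor: there's no deferred or async tool execution and no validation step, `run_composition` and `logged` are hypothetical helpers, and the arithmetic tools are plain functions I defined to match the example. Swapping out `__builtins__` like this is also not a real sandbox, so treat it as an illustration of the data flow only.

```python
import json


# Hypothetical arithmetic tools matching the example above.
def add(a, b):
    return a + b


def multiply(a, b):
    return a * b


def subtract(a, b):
    return a - b


call_log: list[tuple[str, object]] = []


def logged(name, fn):
    """Wrap a tool so every intermediate result is recorded on the executor side."""
    def wrapper(*args, **kwargs):
        out = fn(*args, **kwargs)
        call_log.append((name, out))
        return out
    return wrapper


def run_composition(code: str, tools: dict) -> str:
    """Run model-written code with tools exposed as functions; return only what it prints."""
    lines: list[str] = []

    def captured_print(*args):
        lines.append(" ".join(str(a) for a in args))

    env = {
        # Replace builtins wholesale so the code only sees what we hand it.
        "__builtins__": {"print": captured_print, "len": len, "range": range},
        "json": json,
        **{name: logged(name, fn) for name, fn in tools.items()},
    }
    exec(compile(code, "<otc-sketch>", "exec"), env)
    return "\n".join(lines)


code = """
total = add(5, 7)
product = multiply(total, 3)
result = subtract(product, 4)
print(json.dumps({"answer": result}))
"""
final_output = run_composition(
    code, {"add": add, "multiply": multiply, "subtract": subtract}
)
print(final_output)  # {"answer": 32}
print(call_log)      # [('add', 12), ('multiply', 36), ('subtract', 32)]
```

Three tool calls ran, but the only thing that would go back to the model is the one-line `final_output`. The intermediate values in `call_log` never leave the executor.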

Conclusion

Tool composition is great! Maybe someday, all the providers will settle on a single way of doing things. Or maybe all of them except Google will go bankrupt and then we can just do it Google's way. Until then, we need utilities like this that make it easy to build powerful software without provider lock-in.

You can use these meta-tools today by installing lm-deluge from pip:

    pip install lm-deluge==0.0.81

...and then importing them from our collection of "prefab" tools!

    from lm_deluge.tool.prefab import BatchTool, ToolSearchTool, ToolComposer

And if you don't like my tools, now you know how to go make your own. :)